What is Resonance System Design?

Resonance System Design (RSD) is an interdisciplinary field of research concerned with the design of interfaces, interactions and systems that bring humans and artificial intelligence together synergistically. It aims to use the strengths of both sides to develop creative, productive and ethically robust solutions to complex problems (Shneiderman, 2020). It integrates aspects of cognitive science, UX design, ethics and AI research.

The following are proposed as the initial core principles of Resonance System Design Thinking:

• Focus on people

• Making collective visions visible

• Service prototyping

• Creative idea generation

• Development of effective solutions and perspectives

Introduction

The fundamental premise of this concept is the observation that no single political ideology can adequately serve all stakeholders and marginalised groups in an intricate, dynamic system such as a democracy. Complex dynamic systems appear to require parallel solutions, each tailored to the specific circumstances and context of a particular community (resonance space). Consequently, the most significant challenge is to construct system processes and information channels that fit the desired collective development and the potential and visions of a resonance space. The primary objective is therefore to ascertain which shared and individual perspectives, guiding principles and visions are evoked and expressed in a resonance space.

In particular, modelling complex dynamic systems, such as the human being as an individual and as part of a group, community or population, is crucial for fostering a sense of connectedness and security within the system while leaving room for individual development. According to 4E cognition, however, this feeling is always embodied, embedded, extended and enactive.

This view of cognition already indicates that conventional modelling cannot provide an adequate account, because every individual and every community has highly specific requirements and needs. Any reduction in complexity therefore typically yields an incomplete representation of reality.

One approach to understanding complex dynamic systems is to examine the scientific phenomena that govern them. This model considers the phenomena of entropy, synchronisation and emergence, even though the precise scientific connection between them has not been established. The Belousov-Zhabotinsky reaction, for instance, demonstrates that stable patterns can emerge even while the overall entropy of a system increases.

The development of artificially intelligent systems offers a potential way to harness these phenomena for the advancement of resonance spaces, for example through the equalisation of echo chambers. Nevertheless, despite the considerable efforts of researchers and companies around the globe in the field of AI and AGI, a unified conceptual framework and design that facilitates the harmonious co-evolution of human-human and human-machine interactions remains elusive. This paper discusses an approach to comprehensive human-AI design based on the concept of World Models (LeCun, 2022). The approach is geared towards achieving the UN Sustainable Development Goals (SDGs), considers potential threats and uses the principle of social entropy to promote prosperous and peaceful emergent phenomena.
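The entropy idea above can be made concrete in a small way. The following is a minimal sketch that uses Shannon entropy over the viewpoints present in a resonance space as one possible proxy for how one-sided or balanced that space is. This proxy is an assumption made for illustration only; it is not the definition used in social entropy theory, and the function names and viewpoint labels are hypothetical.

```python
import math
from collections import Counter


def shannon_entropy(labels):
    """Shannon entropy (in bits) of the distribution of labels.

    Assumption: each label stands for the viewpoint of one participant.
    """
    counts = Counter(labels)
    total = len(labels)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def normalised_entropy(labels, n_categories):
    """Entropy scaled to the range 0..1 for a known number of viewpoints."""
    if n_categories < 2:
        return 0.0
    return shannon_entropy(labels) / math.log2(n_categories)


# Hypothetical resonance spaces: one dominated by a single view, one balanced.
echo_chamber = ["A"] * 9 + ["B"]
balanced_space = ["A", "B", "C", "D"]

print(normalised_entropy(echo_chamber, 4))    # low value: one-sided space
print(normalised_entropy(balanced_space, 4))  # high value: balanced space
```

Under this assumption, a value near 0 would indicate an echo chamber and a value near 1 a space in which all viewpoints are equally represented. Whether such a measure captures what matters in a real community is an open question.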

Further development of democratic processes

From an overarching perspective, the development of human-AI systems requires novel methodologies to enhance and reinforce democratic processes in the 21st century. The UN report Governing AI for Humanity: Final Report (United Nations, 2024) underscores the necessity for a global, inclusive approach to AI development and governance. If AI systems are to be incorporated into political decision-making and used to address intricate social challenges in a more transparent and ethical manner, it is imperative to develop standardised, transparent and ethical AI solutions, as recommended in the UN report. Such solutions must be supported by appropriate governance structures and capacity-building measures in order to improve the participation and representation of citizens (Helbing et al., 2017).

This necessitates the design of AI-supported platforms capable of analysing and synthesising vast quantities of citizen feedback in a manner that is intelligible and does not distort the original input, with the objective of obtaining a more nuanced representation of public opinion (Landemore, 2020). On this basis, simulation models could be constructed that elucidate the long-term consequences of political decisions, thereby facilitating more informed discourse (Celi et al., 2021). Blockchain-based voting systems could enhance the transparency and security of electoral processes (Pawlak et al., 2018). Similarly, AI assistants could help citizens navigate intricate political matters (Savaget et al., 2019). Crucially, these technologies are intended to complement, rather than replace, human deliberation and decision-making (Danaher, 2016). A well-designed human-AI collaboration for democratic processes aims to enhance the collective intelligence of society and foster a more inclusive, responsive and sustainable democracy (Mulgan, 2018).

The status quo of AGI research

AGI research has made considerable advances in recent years, particularly through groundbreaking developments in machine learning and neural networks (Goertzel & Pennachin, 2007). Nevertheless, the development of human-like general intelligence remains an unsolved problem. A key difficulty lies in the integration of distinct cognitive abilities into a unified system (Lake et al., 2017).

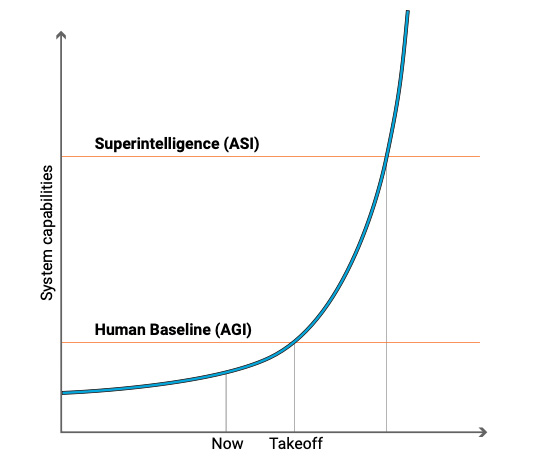

In his book The Singularity Is Near (2005), Ray Kurzweil predicted that the technological singularity would occur around 2045, when artificial intelligence would surpass human intelligence and an artificial superintelligence (ASI) would emerge. Ben Goertzel takes a much shorter-term perspective in his recent book The Consciousness Explosion (2024). He assumes that once a human-like AI (HLAGI) has been achieved, the development towards an ASI will follow very quickly, as this AI will be able to study, improve and scale itself. Goertzel does not see this development taking decades, but rather suggests that we are already in the final phase before the breakthrough to an HLAGI (see Fig. 1).

The economic competition for market share and resources makes the coordinated and ethically robust development of AGI systems a challenging endeavour (Baum, 2017). There is a risk that the competitive dynamics between companies and nations will be replicated at the level of AI systems, with the potential for destabilising consequences for the global order.

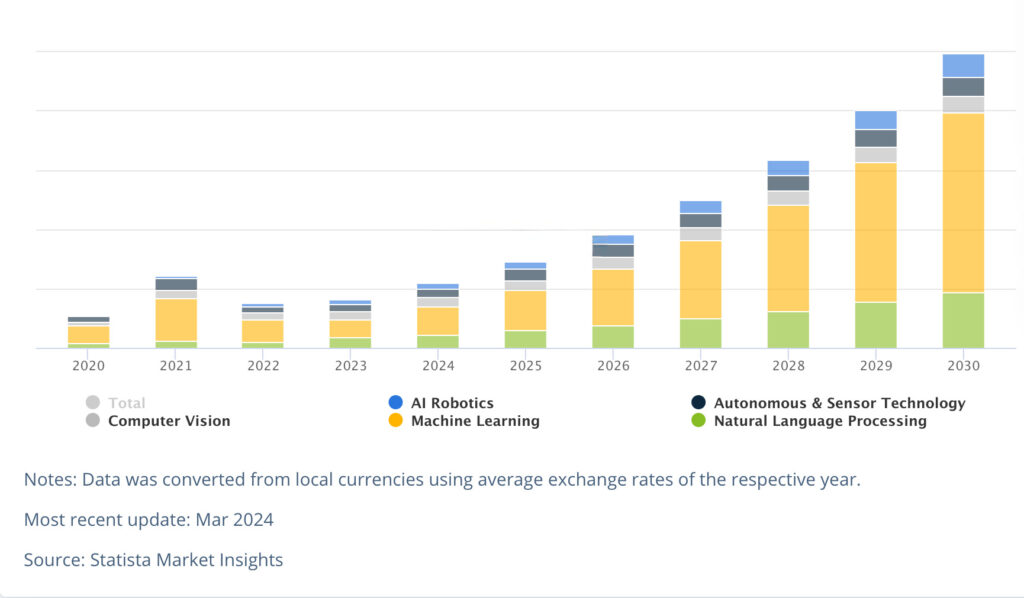

The global AI market is growing substantially, with a projected expansion from USD 184 billion in 2024 to USD 826.7 billion by 2030. This corresponds to a compound annual growth rate (CAGR) of 28.46% (Statista Market Insights, 2024). Of particular interest is the development in the field of computer vision, which enables computers to interpret and understand digital image and video data. According to recent projections, this submarket is anticipated to reach a valuation of USD 41.3 billion by 2028 (Markets and Markets, 2023). In comparison, the machine learning market, which deals with the development of self-learning algorithms, is forecast to reach a considerably larger volume of USD 209.91 billion by 2029 (Fortune Business Insights, 2024). These differing projections reflect the central importance of machine learning as a foundational technology, while computer vision, as a field of application, encompasses more specific yet no less significant areas of use.
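The growth rate quoted above can be checked with a few lines of arithmetic. The sketch below applies the standard CAGR formula, (end / start)^(1 / years) - 1, to the two market figures from the text. The function name `cagr` is chosen here for illustration, and the six-year horizon assumes that the period runs from 2024 to 2030.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate, returned as a fraction (0.10 = 10%)."""
    return (end_value / start_value) ** (1 / years) - 1


# Market sizes in USD billion, as cited in the text.
rate = cagr(184.0, 826.7, 2030 - 2024)
print(f"{rate:.2%}")
```

The result comes out at roughly 28.5% per year, which is consistent with the cited figure of 28.46%.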

World Models as a starting point

The world models proposed by Yann LeCun (2022) represent a promising avenue for the advancement of AGI. The objective is to equip AI systems with a comprehensive understanding of the physical and social world. World models could provide a foundation for a global human-AI design, for instance by incorporating a discrete SDG module into a world model.

It seems probable that the vision of artificial superintelligence will not manifest as a single system in the future. Rather, it is likely to emerge as a multitude of artificially intelligent systems that can be understood as a single entity, analogous to how the diversity of people is understood as humanity. This is why there will also be a plurality of world models. Nevertheless, these can already be regarded as a common foundation for the interaction between disparate AI systems and humans.

From world models to smart household appliances

A global human-AI design will encompass many levels of human-machine interaction, ranging from highly intricate world models to the everyday smart home appliances that make our lives easier. Smart appliances may, for example, incorporate localised versions of world models that interact with the global models. A smart refrigerator could thus not only optimise food consumption but also integrate global sustainability goals into people’s everyday lives, for instance by making recommendations for climate-friendly nutrition.
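The refrigerator scenario above can be sketched in code. The following is a minimal, purely illustrative example of how a local appliance model might consult a global SDG module when ranking what to eat next. All class names, the scoring rule and the climate scores are hypothetical assumptions introduced here; the scores are placeholders, not measured values, and nothing in this sketch is taken from an existing world-model implementation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FoodItem:
    name: str
    days_until_expiry: int
    climate_score: float  # placeholder rating in 0..1, higher = more climate-friendly


class GlobalSDGModule:
    """Hypothetical stand-in for an SDG module of a global world model."""

    def __init__(self, climate_weight):
        # Share of the priority that comes from the climate score (0..1).
        self.climate_weight = climate_weight

    def priority(self, item, horizon_days):
        # Urgency is 1.0 for food expiring today and 0.0 at or beyond the horizon.
        urgency = max(0.0, 1.0 - item.days_until_expiry / horizon_days)
        w = self.climate_weight
        return (1 - w) * urgency + w * item.climate_score


class LocalFridgeModel:
    """Hypothetical local model that defers the weighting to the global module."""

    def __init__(self, sdg_module, horizon_days=7):
        self.sdg_module = sdg_module
        self.horizon_days = horizon_days

    def recommend(self, inventory, top_n=2):
        ranked = sorted(
            inventory,
            key=lambda item: self.sdg_module.priority(item, self.horizon_days),
            reverse=True,
        )
        return [item.name for item in ranked[:top_n]]


# Illustrative inventory with made-up climate scores.
inventory = [
    FoodItem("lentils", days_until_expiry=5, climate_score=0.9),
    FoodItem("beef", days_until_expiry=1, climate_score=0.1),
    FoodItem("spinach", days_until_expiry=1, climate_score=0.8),
]

fridge = LocalFridgeModel(GlobalSDGModule(climate_weight=0.5))
print(fridge.recommend(inventory))
```

The design choice worth noting is the separation of concerns: the local model knows the household inventory, while the weighting between avoiding food waste and climate impact is supplied by the global module and could, under this assumption, be updated without changing the appliance.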

References:

Bailey, K. D. (1990). Social entropy theory. State University of New York Press.

Baum, S. D. (2017). On the promotion of safe and socially beneficial artificial intelligence. AI & Society, 32(4), 543-551.

Bostrom, N. (2014). Superintelligence: Paths, dangers, strategies. Oxford University Press.

Celi, L. A., Cellini, J., Charpignon, M. L., Dee, E. C., Dernoncourt, F., Eber, R., … & Wornow, M. (2021). Sources of bias in artificial intelligence that perpetuate healthcare disparities—A global review. PLOS Digital Health, 1(3), e0000022.

Danaher, J. (2016). The threat of algocracy: Reality, resistance and accommodation. Philosophy & Technology, 29(3), 245-268.

Fortune Business Insights. (2024). Machine Learning Market Size & Share Analysis. https://www.fortunebusinessinsights.com/machine-learning-market-102226

Goertzel, B., & Pennachin, C. (Eds.). (2007). Artificial general intelligence. Springer.

Goertzel, B. (2024). The Consciousness Explosion: A Mindful Human’s Guide to the Coming Technological and Experiential Singularity. With Gabriel Axel Montes. Humanity+ Press.

Goldstein, J. (1999). Emergence as a construct: History and issues. Emergence, 1(1), 49-72.

Helbing, D., Frey, B. S., Gigerenzer, G., Hafen, E., Hagner, M., Hofstetter, Y., … & Zwitter, A. (2017). Will democracy survive big data and artificial intelligence? Scientific American, 25.

Kurzweil, R. (2005). The Singularity is Near: When Humans Transcend Biology. Viking Press.

Lake, B. M., Ullman, T. D., Tenenbaum, J. B., & Gershman, S. J. (2017). Building machines that learn and think like people. Behavioral and Brain Sciences, 40, e253.

Landemore, H. (2020). Open democracy: Reinventing popular rule for the twenty-first century. Princeton University Press.

LeCun, Y. (2022). A path towards autonomous machine intelligence. OpenReview.

Markets and Markets. (2023). Computer Vision Market by Component. https://www.marketsandmarkets.com/Market-Reports/computer-vision-market-186494767.html

Mulgan, G. (2018). Big mind: How collective intelligence can change our world. Princeton University Press.

Pawlak, M., Poniszewska-Marańda, A., & Kryvinska, N. (2018). Towards the intelligent agents for blockchain e-voting system. Procedia Computer Science, 141, 239-246.

Rosa, H. (2016). Resonanz: Eine Soziologie der Weltbeziehung. Suhrkamp Verlag.

Savaget, P., Chiarini, T., & Evans, S. (2019). Empowering political participation through artificial intelligence. Science and Public Policy, 46(3), 369-380.

Shneiderman, B. (2020). Human-AI design: Bridging the gap between artificial intelligence and human creativity. MIT Press.

Statista Market Insights. (2024). The market size in the Artificial Intelligence market worldwide. https://www.statista.com/outlook/tmo/artificial-intelligence/worldwide

United Nations. (2024). Governing AI for Humanity: Final Report. United Nations, AI Advisory Body. https://www.un.org/en/ai-advisory-body